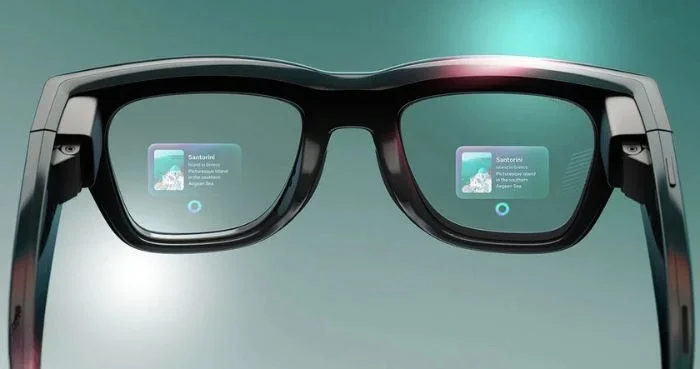

Meta has announced a significant update to its AI-powered smart glasses, adding features for both hearing assistance and entertainment. The update introduces “hearing aid” style functionality that uses audio processing to amplify nearby sounds, making it easier for users to follow conversations and catch other important auditory cues. The feature is aimed at people with mild hearing difficulties, but it is also intended to give all users a clearer, more responsive listening experience.

Beyond the hearing features, the glasses now integrate directly with Spotify, letting users stream music, podcasts, and other audio content through the device. Users can control playback, skip tracks, and adjust volume without reaching for their smartphones, which makes the glasses a more versatile companion for daily activities and travel.

Meta’s latest update reflects the company’s broader push to combine augmented reality, AI, and wearable technology into a single ecosystem that supports communication, entertainment, and accessibility. The glasses retain their core functionalities, including real-time AI transcription, gesture controls, and notification alerts, while the new audio features expand their appeal to a wider audience.

Company officials say the “hearing aid” style feature uses adaptive sound processing to automatically prioritize voices over background noise, which is meant to make it useful in crowded or noisy settings. The Spotify integration, meanwhile, gives users hands-free access to music at work or at leisure without interrupting what they are doing.

Overall, these updates position Meta’s AI glasses as more than a smart wearable: a multifunctional device that bridges accessibility, entertainment, and AI-assisted technology.